Complete guide on deploying a Docker application (React) to AWS Elastic Beanstalk using Docker Hub and Github Actions

I recently went through the struggle of setting up a pipeline for deploying a dockerized React app to Beanstalk, and the whole process has left me with a newfound respect for the magicians we sometimes take for granted: DevOps engineers.

In this article I will go over the process I used to deploy a Docker image to beanstalk using Docker Hub for hosting the image and GitHub Actions for building and orchestrating the whole process. In my journey I discovered that there are multiple ways of achieving this and there really isn't a "best" approach for all use cases. However, my familiarity with beanstalk and fear of getting started with a new technology like ECS were the main motivations behind using this approach. So if you're anything like me, keep reading.

I have broken the whole process into smaller, independent (somewhat) steps that give a clear, high-level picture of the entire pipeline from setting up Docker to having the image running on Beanstalk.

Pipeline Steps

- Create necessary accounts

- Dockerizing your application

- Building the image on Github using Actions and pushing to Docker Hub

- Deploying Docker Hub image to AWS Elastic Beanstalk

- Making Docker repository private (Optional)

Create necessary accounts

Let's sign up for all the services that we'll need for this setup.

- Github

- Docker Hub

- Amazon Web Services (AWS)

Dockerizing your application

Why Docker?

Why use Docker? Good question. In my opinion it's the closest you can get to sharing a single "machine" for development with all your peers. If this answer doesn't appeal to you then I sincerely urge you to read more on this topic, as there are plenty of articles written by more qualified developers talking about why you should use Docker and how it can make your life easier.

Setting Up Docker

Now that you're convinced, let's go over the docker configurations. For the purpose of this article, I'm going to assume that you already have a basic react (or any other) application set up with docker that you can start by building the image and running the container. If you don't have it set up then you can start with create-react-app and then add docker manually or clone a boilerplate like this one.

Here's what the Dockerfile for my react application looks like:

# Dockerfile

# pull official base image
FROM node:13.12.0-alpine

# set working directory
WORKDIR /app

# add `/app/node_modules/.bin` to $PATH
ENV PATH /app/node_modules/.bin:$PATH

# install app dependencies
COPY package.json ./
COPY package-lock.json ./
RUN npm install

# start app
CMD ["npm", "run", "start"]

# expose port
EXPOSE 3000

Now that you have a dockerized application, let's create the docker configuration for the production server, which uses nginx as the web server (I named it Dockerfile.prod).

# Dockerfile.prod

# build environment
FROM node:13.12.0-alpine as build
WORKDIR /app
ENV PATH /app/node_modules/.bin:$PATH
COPY package.json ./
COPY package-lock.json ./
RUN npm ci
COPY . ./
RUN npm run build

# production environment
FROM nginx:stable-alpine
COPY --from=build /app/build /usr/share/nginx/html

# to make react-router work with nginx
COPY nginx/nginx.conf /etc/nginx/conf.d/default.conf

EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]

Note: If you're not sure why we use nginx, then I highly recommend reading more about it.

This is a multi-stage build. The first stage installs the dependencies and runs the build script (npm run build). The second stage copies the files generated by the build script into the nginx image under the /usr/share/nginx/html location, adds the nginx config, exposes port 80 and starts the server.
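Dockerfile.prod copies nginx/nginx.conf into the image, but the contents of that file aren't shown here. As a rough sketch only, assuming a typical single-page app where every unknown path should fall back to index.html, a helper like the one below could generate such a file. The function name write_nginx_conf is made up for this example, and your own config may well need more than this.

```shell
# Sketch: write a minimal nginx config for a single-page app.
# write_nginx_conf is a hypothetical helper name, not part of any tool.
# The body runs in a subshell so its variables don't leak.
write_nginx_conf() (
  dir="${1:-nginx}"   # target folder; Dockerfile.prod expects nginx/nginx.conf
  mkdir -p "$dir"
  # Quoted 'EOF' keeps the shell from expanding nginx variables like $uri
  cat > "$dir/nginx.conf" <<'EOF'
server {
  listen 80;

  location / {
    root /usr/share/nginx/html;
    index index.html;
    # Serve index.html for unknown paths so client-side routes survive a refresh
    try_files $uri $uri/ /index.html;
  }
}
EOF
)

# Usage (run from the project root; overwrites an existing nginx/nginx.conf):
#   write_nginx_conf nginx
```

The try_files line is the part that matters for react-router: without it, refreshing the browser on a client-side route would return a 404 from nginx.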

Here's an optional docker-compose file that I use along with Dockerfile.prod to build and test the production build locally. I use it by running docker compose -f docker-compose.prod.yml up --build and then going to localhost:80 in my browser.

You don't need this in order to continue with this tutorial.

# docker-compose.prod.yml
version: '3.8'

services:
  frontend-prod:
    container_name: frontend-prod
    build:
      context: .
      dockerfile: Dockerfile.prod
    ports:
      - '80:80'

Building the Image on Github Using Actions and Pushing to Docker Hub

Now let's set up Github Actions to build the production docker image whenever you push code to a branch and then push that image to Docker Hub. I'm assuming that you have already set up a github account and are able to push code to your repo. If you haven't done that yet, then you should stop here, create a github account, push your code to a new repo and then come back.

Github Actions YAML File

Github Actions works by reading YAML files under .github/workflows/ in your codebase for instructions (we'll call ours main.yml). It uses basic YAML syntax, which is human friendly and should be fairly easy to follow. Let's create this YAML file from Github's UI and add some basic steps.

We're going to add the following content to the file:

name: Dev deployment from Github to AWS

on:
  push:
    branches:
      - main

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout Latest Repo
        uses: actions/checkout@master

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v1

      - name: Login to DockerHub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_PASSWORD }}

      - name: Build and push
        uses: docker/build-push-action@v2
        with:
          context: .
          file: Dockerfile.prod
          push: true
          tags: <your repo name>/<your application name>:latest

Here we're setting the name of the action, which you can see on line 1. Then we say that on a push event on the main branch we're going to run the jobs defined below. Next we define the deploy job, which runs on an ubuntu-latest machine. Then we define the following steps:

- Use the actions/checkout@master action to check out the main branch

- Use the docker/setup-buildx-action@v1 action to set up Buildx, the tool we'll use to build and push the image to Docker Hub

- Use the docker/login-action@v1 action to authenticate with Docker Hub. We'll set the secrets in the repository settings on Github in the next step.

- Use the docker/build-push-action@v2 action to build the image using the Dockerfile.prod file and then push it as <your repo name>/<your application name> on Docker Hub with the latest tag.
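If you want to check the build before relying on the workflow, the build and push steps above can be approximated by hand. The following is only a sketch: it assumes you have already run docker login on your machine, the image name myuser/my-react-app is a made-up placeholder, and the run helper and DRY_RUN switch are inventions of this example rather than part of Docker.

```shell
# Sketch: local equivalent of the workflow's "Build and push" step.
# IMAGE is a hypothetical name; replace it with <your repo name>/<your application name>:latest.
IMAGE="${IMAGE:-myuser/my-react-app:latest}"
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

build_and_push() {
  # Build the production image from Dockerfile.prod, using the project root as context
  run docker build --file Dockerfile.prod --tag "$IMAGE" .
  # Push the tagged image to Docker Hub (requires a prior `docker login`)
  run docker push "$IMAGE"
}

build_and_push
```

Running it with the default DRY_RUN=1 is a harmless way to see the exact commands before executing anything.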

Now that we have added these steps to the main.yml file, let's commit the changes and go back to our local machine and pull the latest.

Github Secrets

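Now let's create the secrets in our Github repo. In your repository on Github, go to Settings, open the Secrets section, and add a new repository secret named DOCKERHUB_USERNAME with your Docker Hub username as its value.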

Use the same process to add the DOCKERHUB_PASSWORD secret.

Running the Action

Now that we have everything set up, let's make some minor code changes in the main branch and push. Once you do, you should be able to navigate to the actions tab on Github and see the deploy action running. It should look something like this.

Once the action is complete, open your Docker Hub account and make sure that the image was pushed successfully.

Deploying Docker Hub image to AWS Elastic Beanstalk

In this section we're going to set up AWS to deploy the Docker Hub image to Elastic Beanstalk and have our application available on the world wide web! We'll achieve this by sending instructions to beanstalk from Github Actions to pull and run the image from Docker Hub.

Setting Up AWS

Before getting started, you should complete creating an account on AWS and then setting up payments, etc. to be able to create a Beanstalk application.

Creating Beanstalk Admin User for Deployments

Follow these steps to create an IAM user with programmatic access that we'll use to deploy packages to our Beanstalk application from Github Actions:

- Navigate to IAM

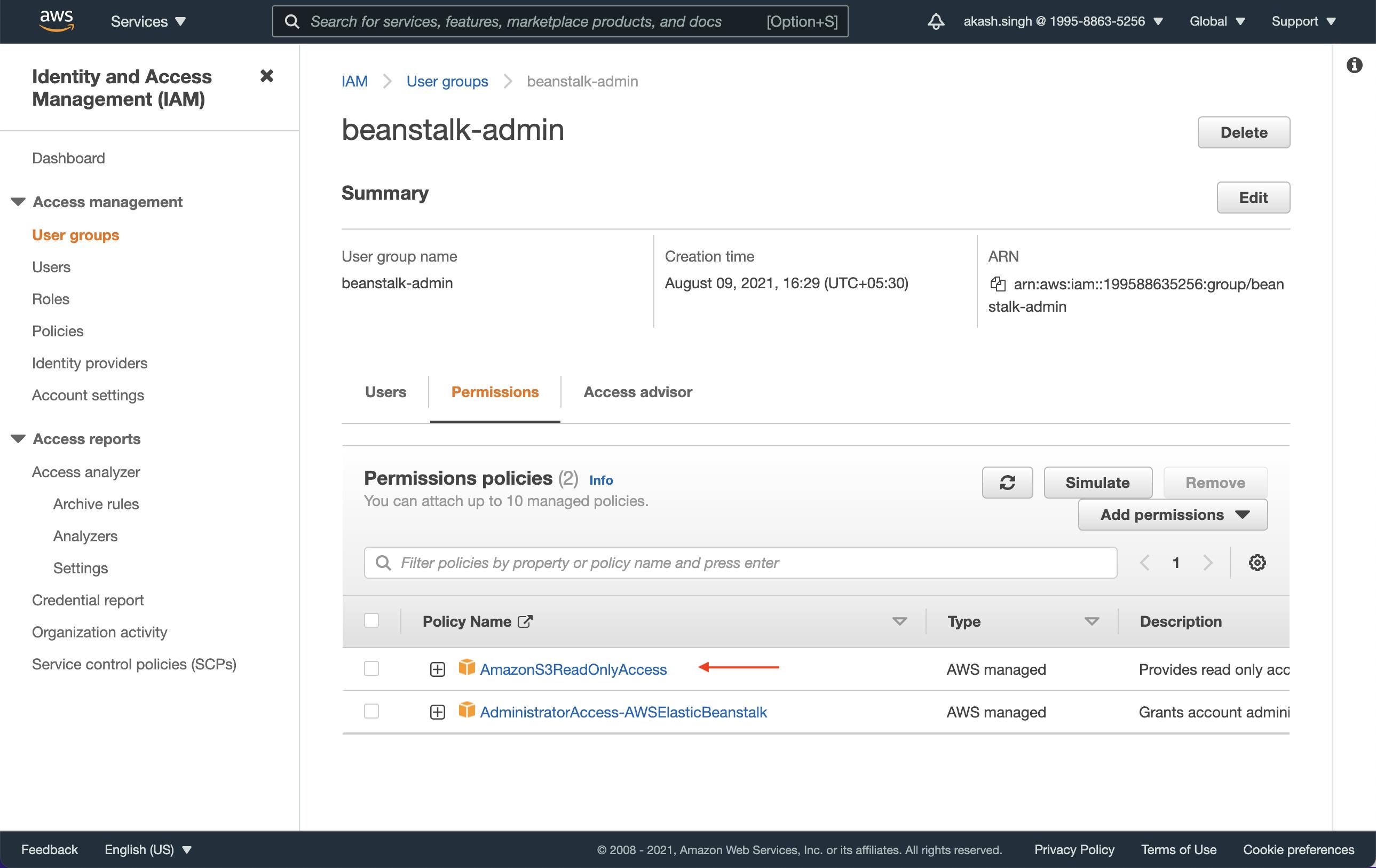

- Create a new Group (We'll call it beanstalk-admin here)

- Add the AdministratorAccess-AWSElasticBeanstalk permission to the beanstalk-admin group

It should look something like this:

Ignore the other permission for now.

- Create a new user called github-deployment-user

- Give Programmatic Access to this user and add it to the beanstalk-admin group

- Copy the Access key ID and the Secret access key. We're going to need these later

Create Beanstalk Application

Let's create a new Beanstalk application that we'll deploy to. Navigate to Elastic Beanstalk, click Create Application, name the application, and then set the platform as Docker and leave everything else as default.

Now that we have everything set up on AWS, let's create the instructions file that will tell beanstalk to pull & run the right image from Docker Hub.

Create Dockerrun.aws.json

Beanstalk can work with docker-compose or Dockerrun.aws.json for getting instructions on what image to deploy but to keep things simple and set us up for the last step of this pipeline we're going to be using the Dockerrun.aws.json file. You should create this file at the root of your project folder.

// Dockerrun.aws.json
{
  "AWSEBDockerrunVersion": "1",
  "Image": {
    "Name": "<your repo name>/<your application name>:latest",
    "Update": "true"
  },
  "Ports": [
    {
      "ContainerPort": "80"
    }
  ],
  "Logging": "/var/log/nginx"
}

Here we're telling beanstalk to pull the <your repo name>/<your application name>:latest image and then expose port 80 of the container.

Update Github Actions to Send Instructions to Beanstalk

Now we're going to update the Github Actions YAML file we added earlier. Let's add the following steps to our existing deploy job:

# .github/workflows/main.yml continued
      - name: Get Timestamp
        uses: gerred/actions/current-time@master
        id: current-time

      - name: Run String Replace
        uses: frabert/replace-string-action@master
        id: format-time
        with:
          pattern: '[:\.]+'
          string: "${{ steps.current-time.outputs.time }}"
          replace-with: '-'
          flags: 'g'

      - name: Generate Deployment Package
        run: zip -r deploy.zip * -x "**node_modules**"

      - name: Deploy to EB
        uses: einaregilsson/beanstalk-deploy@v16
        with:
          aws_access_key: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws_secret_key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          application_name: <beanstalk application name>
          environment_name: <beanstalk environment name>
          version_label: "docker-app-${{ steps.format-time.outputs.replaced }}"
          region: us-west-2
          deployment_package: deploy.zip

Here we're adding the following steps:

- Get the current timestamp (this is for tracking the version on beanstalk)

- Use action frabert/replace-string-action@master to replace : and . with - in the timestamp string (optional)

- Create a zipped package deploy.zip of our codebase excluding the node_modules folder. Note: We're doing this to send the Dockerrun.aws.json, which is at the root of our project, to beanstalk.

- Use action einaregilsson/beanstalk-deploy@v16 to push the zip to beanstalk. Make sure that you set the right values for application_name, environment_name, and region
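The two timestamp steps exist only to produce a unique version label for each deployment. To make the string replacement concrete, here is a rough shell equivalent. Treat it as a sketch: the function name format_version_label is made up, and the sample timestamp is an assumed ISO-style value rather than captured output from the action.

```shell
# Sketch: mimic the "Run String Replace" step and the version_label template.
# format_version_label is a hypothetical helper name.
format_version_label() {
  # Replace every run of ':' or '.' with '-', like pattern '[:\.]+' with flags 'g'
  cleaned="$(printf '%s' "$1" | sed -E 's/[:.]+/-/g')"
  printf 'docker-app-%s\n' "$cleaned"
}

# Assumed sample input, for illustration only
format_version_label "2021-08-28T10:15:30.123Z"
# prints: docker-app-2021-08-28T10-15-30-123Z
```

Because the label embeds the time of the run, every push produces a new application version on beanstalk, which makes it easy to tell deployments apart.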

As you might have guessed looking at the steps, we'll need to add AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY secret keys to our Github repository. AWS_ACCESS_KEY_ID is the Access Key ID and AWS_SECRET_ACCESS_KEY is the Secret access key for the github-deployment-user that we created in step 6 of the Creating Beanstalk Admin User for Deployments section.

Now that you have added the secrets to the Github repo, go ahead and commit & push the updated main.yml and the newly added Dockerrun.aws.json files. This should start a new deploy job under the Actions tab with the commit message as the title. Expand the job to make sure that you see the new steps that you added to your main.yml file.

Once the job completes, and if everything goes well, you should have your application deployed successfully in beanstalk and you should be able to access it by going to the beanstalk instance's public URL.

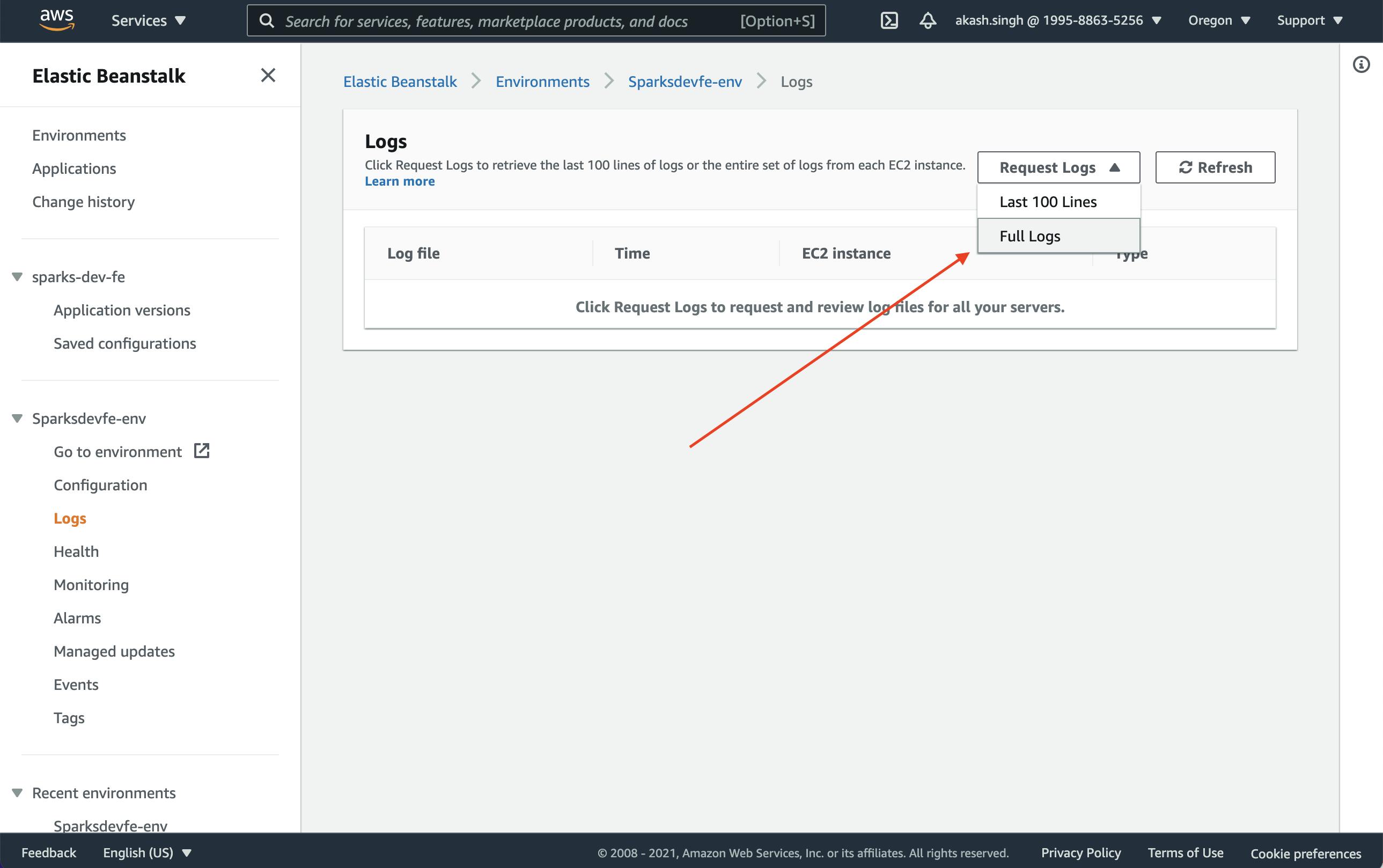

Note: If something breaks on AWS and you see that the health of your application is red, then go to the Logs tab and download the full logs. Unzip the package and look at the eb-engine.log file. You should be able to find what went wrong by searching for the [ERROR] line in there.
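Searching the log by hand works, but a one-line helper is quicker. This is a small sketch; find_eb_errors is a made-up function name, and the path to eb-engine.log inside the unzipped bundle is something you'll need to fill in yourself.

```shell
# Sketch: list the [ERROR] lines of an eb-engine.log file with their line numbers.
# find_eb_errors is a hypothetical helper name.
find_eb_errors() {
  # -n prints line numbers; -F matches "[ERROR]" literally instead of as a bracket expression
  grep -n -F '[ERROR]' "$1"
}

# Usage (adjust the path to wherever you unzipped the logs):
#   find_eb_errors path/to/eb-engine.log
```

The -F flag matters here: without it, grep would read [ERROR] as a character class and match any line containing an E, R or O.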

Making Docker repository private (Optional)

Now let's talk about how we can make our Docker Hub repository private. So far our image has been publicly available on Docker Hub, meaning that anybody can find and pull it. In this section we'll go over making the Docker repo private and authorizing our beanstalk instance to pull the image from Docker Hub.

I struggled a bit with this part due to outdated documentation on Amazon's site and not having any recent answers from the community on Stackoverflow.

Here's basically what we need to do:

- Make the Docker repository private by going to Docker Hub and changing the settings.

- Create an authorization token and save it in a file that beanstalk can read.

- Save that file on S3 so that our github-deployment-user can access it during deployment.

- Update the Dockerrun.aws.json file to use the authorization token we created in step 2.

Making Docker Repo Private

This is pretty straightforward. You go to Docker Hub, then find the repository, go to settings and make it private. Docker gives you 1 free private repository with each Docker Hub account.

Getting the Authorization Token and Saving in a File

We'll call this file dockercfg because that is what the documentation keeps calling it, but feel free to name it anything you want, like my-nightmare-file. This is what the contents of the file should look like:

{
  "auths": {
    "https://index.docker.io/v1/": {
      "auth": "<your auth token>"
    }
  }
}

Now the easiest way to create this file is by running the command docker login in your terminal and then copying the auths object from config.json file stored in ~/.docker/ folder (Windows folk, please Google the location). Now the problem here is that on macOS you'll see something like this:

{
  "auths": {
    "https://index.docker.io/v1/": {}
  },
  "credsStore": "desktop",
  "experimental": "disabled",
  "stackOrchestrator": "swarm"
}

This is because docker is using your Keychain API to securely store the auth token instead of writing it to a file. Which is great, until you need the token. But thanks to the power of Stack Overflow, I learned that you can generate the authorization string by running this in your terminal:

echo -n '<docker hub username>:<docker hub password>' | base64

Once you have this, create the dockercfg file as above (with the auth token) and save it on your computer. We're going to update some AWS configurations and upload it to S3 next.
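To avoid copying the token into the JSON by hand, both steps can be combined. The helper below is a sketch under the same assumptions as the command above; write_dockercfg is a made-up name, and the file layout is simply the one shown earlier in this section.

```shell
# Sketch: build the base64 auth string and write the dockercfg file in one go.
# write_dockercfg is a hypothetical helper name; the body runs in a subshell
# so the credentials don't linger in shell variables afterwards.
write_dockercfg() (
  username="$1"
  password="$2"
  outfile="${3:-dockercfg}"
  # printf avoids a trailing newline; tr strips line wraps some base64 builds add
  token="$(printf '%s' "${username}:${password}" | base64 | tr -d '\n')"
  cat > "$outfile" <<EOF
{
  "auths": {
    "https://index.docker.io/v1/": {
      "auth": "${token}"
    }
  }
}
EOF
)

# Usage:
#   write_dockercfg '<docker hub username>' '<docker hub password>' dockercfg
```

Keep in mind that base64 is an encoding, not encryption: anyone who can read this file can recover the password, so treat dockercfg like a password and keep it out of your repository.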

Uploading dockercfg to S3

Now you need to upload this file to an S3 bucket that is in the same region as our beanstalk application and add S3 read access to our github-deployment-user IAM user so that the beanstalk application can read the file.

AWS Setup

To add permission to our user, go to IAM then go to user groups, select the beanstalk-admin group and add the AmazonS3ReadOnlyAccess permission.

Uploading to AWS

Now let's go to S3 and create a new bucket. We'll call it docker-login-bucket but you can call it anything you like. Make sure you uncheck the Block all public access option. Once the bucket is created, we'll upload the dockercfg file which we created in the previous step. On the Upload page, after you select the file, expand the Permissions section, select Specify Individual ACL Permissions, and then enable both Read checkboxes for the third option, Authenticated User Groups. This will allow our beanstalk user to read the contents of this file.
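If you prefer the terminal to the console, the bucket creation and upload can be sketched with the AWS CLI. This assumes the CLI is installed and configured with credentials that can create buckets, that the bucket name docker-login-bucket is still available, and that us-west-2 is your region (it is the one used in the workflow above). The run helper and DRY_RUN switch are inventions of this example, and the sketch does not set any of the permissions described above; those still need to be configured separately.

```shell
# Sketch: create the bucket and upload dockercfg with the AWS CLI.
BUCKET="${BUCKET:-docker-login-bucket}"   # bucket name used in this article
REGION="${REGION:-us-west-2}"             # assumed to match your beanstalk region
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

upload_dockercfg() {
  # Make the bucket in the same region as the beanstalk application
  run aws s3 mb "s3://$BUCKET" --region "$REGION"
  # Upload the file under the key that Dockerrun.aws.json will reference
  run aws s3 cp dockercfg "s3://$BUCKET/dockercfg" --region "$REGION"
}

upload_dockercfg
```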

Updating Dockerrun.aws.json

Now we need to tell beanstalk that our Docker repository is private and point it to the dockercfg file so that it can use the authorization token when pulling the image from Docker Hub.

We'll add an authentication object to the Dockerrun.aws.json file which will point to the dockercfg file in S3.

"Authentication": {

"bucket": "docker-login-bucket",

"key": "dockercfg"

},

After adding this, the Dockerrun.aws.json file should look like this

{
  "AWSEBDockerrunVersion": "1",
  "Authentication": {
    "bucket": "docker-login-bucket",
    "key": "dockercfg"
  },
  "Image": {
    "Name": "<your repo name>/<your application name>:latest",
    "Update": "true"
  },
  "Ports": [
    {
      "ContainerPort": "80"
    }
  ],
  "Logging": "/var/log/nginx"
}

Now that we've updated the Dockerrun.aws.json file, let's push the code to Github and check the new deploy action that gets created. If everything was set up correctly then the pipeline should complete successfully and you should be able to see your application running on the beanstalk application URL.

Congratulations! You have successfully set up a pipeline that builds a Docker image using Github Actions, stores it in a private repository on Docker Hub and then deploys it to an AWS Elastic Beanstalk application. Every push to the main branch should now successfully deploy your code. 🎉

Update - 8/28/2021

If you want to use docker-compose.yml instead of Dockerrun.aws.json for deploying then follow the steps provided here.